If you talk to people about the potential of artificial intelligence, almost everybody brings up the same thing: the fear of replacement. For most people, this manifests as a dread certainty that AI will ultimately make their skills obsolete. For those who actually work on AI, it usually manifests as a feeling of guilt – guilt over creating the machines that put their fellow humans out of a job, and guilt over an imagined future where they’re the only ones who are gainfully employed.

In recent months, those uneasy feelings have intensified, as investment and innovation in generative AI have exploded. A relatively new machine learning technique called diffusion models has brought text-to-image generation to maturity. A wave of AI art applications like Midjourney and Stable Diffusion has made a huge splash, and Stability AI, the company behind Stable Diffusion, has raised $101 million. Meanwhile, Jasper, a company that uses AI to generate written content, raised $125 million. In an era when much of the tech industry seems to be down in the dumps, AI is experiencing a golden age. And this has lots of people worried.

To put it bluntly, we think the fear, and the guilt, are probably mostly unwarranted. No one knows, of course, but we suspect that AI is far more likely to complement and empower human workers than to impoverish them or displace them onto the welfare rolls. This doesn’t mean we’re starry-eyed Panglossians; we realize that this optimistic perspective is a tough sell, and even if our vision comes true, there will certainly be some people who lose out. But what we’ve seen so far about how generative AI works suggests that it’ll largely behave like the productivity-enhancing, labor-saving tools of past waves of innovation.

AI doesn’t take over jobs, it takes over tasks

If AI causes mass unemployment among the general populace, it will be the first time in history that any technology has ever done that. Industrial machinery, computer-controlled machine tools, software applications, and industrial robots all caused panics about human obsolescence, and nothing of the kind ever came to pass; pretty much everyone who wants a job still has a job. As Noah has written, a wave of recent evidence shows that adoption of industrial robots and automation technology in general is associated with an increase in employment at the company and industry level.

That’s not to say it couldn’t happen, of course – sometimes technology does totally new and unprecedented things, as when the Industrial Revolution suddenly allowed humans to escape Malthusian poverty for the first time. But it’s important to realize exactly why the innovations of the past didn’t result in the kind of mass obsolescence that people feared at the time.

The reason was that instead of replacing people entirely, those technologies simply replaced some of the tasks they did. If, like Noah’s ancestors, you were a metalworker in the 1700s, a large part of your job consisted of using hand tools to manually bash metal into specific shapes. Two centuries later, after the advent of machine tools, metalworkers spent much of their time directing machines to do the bashing. It’s a different kind of work, but you can bash a lot more metal with a machine.

Economists have long realized that it’s important to look at labor markets not at the level of jobs, but at the level of tasks within a job. In their excellent 2018 book Prediction Machines, Ajay Agrawal, Joshua Gans, and Avi Goldfarb talk about the prospects for predictive AI – the kind of AI that autocompletes your Google searches. They raise the possibility that this technology will simply let white-collar workers do their jobs more efficiently, similar to what machine tools did for blue-collar workers.

Daron Acemoglu and Pascual Restrepo have a mathematical model of this (here’s a more technical version), in which they break jobs down into specific tasks. They find that new production technologies like AI or robots can have several different effects. They can make workers more productive at their existing tasks. They can shift human labor toward different tasks. And they can create new tasks for people to do. Whether workers get harmed or helped depends on which of these effects dominates.

In other words, as Noah likes to say, “Dystopia is when robots take half your jobs. Utopia is when robots take half your job.”

Comparative advantage: Why humans will still have jobs

You don’t need a fancy mathematical model, however, to understand the basic principle of comparative advantage. Imagine a venture capitalist (let’s call him “Marc”) who is an almost inhumanly fast typist. He’ll still hire a secretary to draft letters for him, though, because even if that secretary is a slower typist than him, Marc can generate more value using his time to do something other than drafting letters. So he ends up paying someone else to do something that he’s actually better at.
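The arithmetic behind Marc’s decision can be made explicit. The sketch below uses made-up numbers (the hours per letter, the value of an hour of Marc’s time, and the secretary’s wage are all assumptions, not figures from the text). Even though Marc types twice as fast, having him type costs far more in forgone venture work than paying the secretary does.

```python
# Hypothetical numbers, chosen only to illustrate comparative advantage.
MARC_HOURS_PER_LETTER = 0.5        # Marc is the faster typist (absolute advantage)
SECRETARY_HOURS_PER_LETTER = 1.0   # the secretary takes twice as long
MARC_VALUE_PER_HOUR = 1000.0       # value Marc creates per hour doing VC work instead
SECRETARY_WAGE_PER_HOUR = 50.0     # what Marc pays the secretary per hour


def cost_if_marc_types(letters: int) -> float:
    """Opportunity cost: the VC-work value Marc gives up while typing."""
    return letters * MARC_HOURS_PER_LETTER * MARC_VALUE_PER_HOUR


def cost_if_secretary_types(letters: int) -> float:
    """Wages paid so that Marc's own hours stay on VC work."""
    return letters * SECRETARY_HOURS_PER_LETTER * SECRETARY_WAGE_PER_HOUR


def break_even_wage() -> float:
    """Highest hourly wage at which delegating a letter still pays off for Marc."""
    return MARC_HOURS_PER_LETTER * MARC_VALUE_PER_HOUR / SECRETARY_HOURS_PER_LETTER


letters = 10
marc_cost = cost_if_marc_types(letters)            # 10 * 0.5 * 1000 = 5000
secretary_cost = cost_if_secretary_types(letters)  # 10 * 1.0 * 50 = 500
print(f"Marc types: ${marc_cost:,.0f} forgone")
print(f"Secretary types: ${secretary_cost:,.0f} in wages")
print(f"Delegating pays at any wage below ${break_even_wage():,.0f}/hour")
```

Being faster only shrinks what typing costs Marc; it doesn’t make that cost zero. As long as the secretary’s wage stays below the break-even figure, delegating is the better deal, whoever is the better typist.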

Now think about this in the context of AI. Some people think that the reason previous waves of innovation didn’t make humans obsolete was that there were some things humans still did better than machines – e.g. writing. The fear is that AI is different, because the holy grail of AI research is something called “general intelligence” – a machine mind that performs all tasks as well as, or better than, the best humans. But as we saw with the example of Marc and the secretary, just because you can do everything better doesn’t mean you end up doing everything! Applying the idea of comparative advantage at the level of tasks instead of jobs, we can see that there will always be something for humans to do, even if AI could do those things better. Just as Marc has a limited number of hours in the day, AI resources are limited too – as roon likes to say, every time you use any of the most advanced AI applications, you’re “lighting a pile of GPUs on fire”. Those resource constraints explain why humans who want jobs will find jobs: AI businesses will keep expanding and gobbling up more physical resources until human workers themselves, and the work they do to complement AI, become the scarce resource.

The principle of comparative advantage says that whether the jobs of the future pay better or worse than the jobs of today depends to some degree on whether AI’s skill set is very similar to humans’, or different and complementary. If AI simply does things differently than humans do, then the complementarity will make humans more valuable and will raise wages.

And although we can’t speak to the AI of the future, we believe that the current wave of generative AI does things very differently from humans. AI art tends to differ from human-made art in subtle ways – its minor details are often off, and the errors compound in such an uncanny-valley fashion that the net result can end up looking horrifying. Anyone who’s ridden in a Tesla knows that an AI backs into a parallel parking space differently than a human would. And for all the hype regarding large language models passing various forms of the Turing Test, it’s clear that their skill set is not exactly the same as a human’s.

Because of these differences, we think that the work that generative AI does will basically be “autocomplete for everything”.

How the best generative AI apps work now

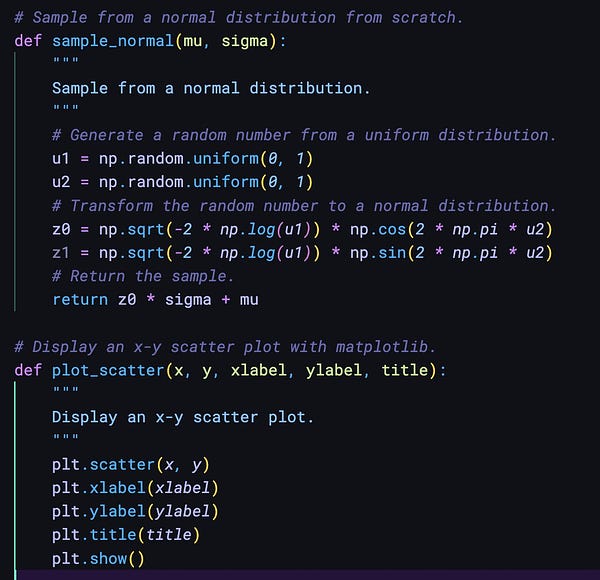

The most profoundly impactful generative AI application to come out thus far is a tool aptly called GitHub Copilot. Recently, “large language models,” or “LLMs,” have attracted great interest due to their broad prowess at general knowledge and simple reasoning tasks. At training time, they study a vast corpus of text scraped from the internet and gain an intelligence as broad in scope as the source material itself. Powered by an LLM trained on an enormous body of publicly available computer code, Copilot is able to suggest the next few lines of code for a programmer based on what he or she has already written.

This has fundamentally changed the nature of what software engineers do. Whereas before a software engineer had to remember, search for, or infer every low-level piece of functionality in their program, they can now describe *in plain language* what they would like a snippet of their program to do and, if the task is within the capacity of the language model, have it synthesized from nothing!

As in the example above, engineers can simply ask for a piece of code to accomplish a minor task and in many cases, successfully summon it from a machine intelligence. This has profound implications for how easy it is to learn a new programming language or write unfamiliar programs with limited experience. In effect, no software engineer will be running solo anymore: everyone from students writing their first computer code to industry experts will have a superhumanly knowledgeable, but idiosyncratic pair programmer built into their tools.

A certain layer of the software value chain has been vastly enhanced by capital in the last few months. An engineer dreams up a large project – they reason about the business task at hand at a high level and break it down into smaller subproblems – but the lowest level of implementation, the act of remembering or learning countless programming languages and frameworks and their incantations, has become much easier. If you have an idea like “I want to turn this dataset into a scatter plot,” the AI is more than capable of remembering the right way to do it. It doesn’t always get it perfectly right, but it almost always makes a good-enough first attempt, which a human can then go through to work out the granular reasoning errors or modify to their preference. This is complementarity: humans are good at reasoning about business logic, but their brains aren’t built to remember an enormous environment of implementation details. As such, Copilot will not only raise the productivity of individual programmers but also create more programmers generally.

Another of the most popular generative AI applications is the art app Midjourney. Born in the forum culture of Discord, Midjourney takes text descriptions and turns them into convincing digital art. First, you enter a prompt, and MJ produces a selection of four low-resolution image samples. Then you pick the one you like most, and MJ pours more resources into it to create a high-resolution piece of digital art. The large-scale coherence and compositional understanding of these models is immediately striking. But if you look closely, you’ll find that several details are off. Midjourney tends to screw up characters' hands, giving them too many or too few fingers, and window panes may not line up. Moreover, Midjourney isn’t dreaming up "Guernica": there’s a limit to the narrative abstraction a text prompt can carry, beyond which the model loses its grip and starts spitting out nonsense. It’s up to the artist to distill a creative motive, an inspiration, into simpler ideas a machine can understand, and to iterate on them. At the moment, prompting is an inexact science in which a human must find the right way to communicate intent to an AI – a problem not dissimilar to the difficulty of delegating to other humans! Finally, the artist must fix any details the AI has gotten wrong.

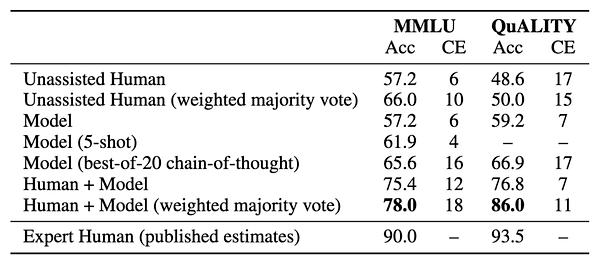

These are just two examples, but they seem to point to a general regularity in how the new crop of generative AI apps functions. Generative AI is quite good at certain parts of the value chain of knowledge work, but it thinks quite differently from humans. Anthropic, a company that focuses on understanding how AI works, has found that humans working side by side with expert AI assistants on various tasks perform better than either the AI or a human alone.

One caveat to this line of thought is that AI iterates rapidly: every 12 months brings technological advances that would have seemed alien only a short time before. The capacity of these models and the scope of their abilities will expand. While we can’t rule out the possibility that future AI tech might simply dominate at everything a human does, leaving us in the realm of comparative rather than absolute advantage, the latest crop of generative AI looks more like something that gives humans superpowers.

Some examples of how “autocomplete for everything” could work

One thing everyone wants to know when thinking about technology and the future of work is what they’ll actually be doing for a living in the future. It’s not enough for economists to just wave their hands and say “Oh, we’ll find something for people to do.” But although we can’t know for sure what the jobs of the future will look like, we can imagine how many of today’s creative jobs might change in the age of “autocomplete for everything”.

Take op-ed writers, for instance – an example that’s obviously important to Noah. Much of the task of nonfiction writing involves coming up with new ways to phrase sentences, rather than figuring out what the content of a sentence should be. AI-based word processors will automate this boring part of writing – you’ll just type what you want to say, and the AI will phrase it in a way that makes it sound comprehensible, fresh, and non-repetitive. Of course, the AI may make mistakes, or use phrasing that doesn’t quite fit a human writer’s preferred style, but this just means the human writer will go back and edit what the AI writes.

In fact, Noah imagines that at some point, his workflow will look like this: First, he’ll think about what he wants to say and type out a list of bullet points. His AI word processor will then turn each of these bullet points into a sentence or paragraph, written in a facsimile of Noah’s usual writing style. Noah will then go back and edit what the AI wrote, altering phrasing, adding sentences, phrases, or links where appropriate, and so on. The result is an iterative, collaborative writing loop in which an AI coauthor masters different parts of the cognitive stack than Noah himself does, not dissimilar to how this article was co-written.

Many artists will likely have a similar workflow. Suppose you want to create a painting of a space adventurer riding a giant rabbit on Mars. You’ll write (or speak) a prompt, and the AI will create a bunch of alternative pictures – perhaps adapted to your own art style, or that of a famous artist like Frank Frazetta. You’ll then select one of the alternatives and go to work on it. Maybe you’ll continue to prompt the AI to alter that image or riff on it. When you finally have something close to what you want, you’ll go in and change the details by hand – including cleaning up the hands, hair, or other little “edge cases” that the AI messes up.

Industrial design will work in a similar way. Take a look at any mundane, boring object in the room around you – a lamp, or a TV stand, or a coffee maker. Some human being had to come up with the design for that. With generative AI, the designer won’t have to look through pages and pages of examples to riff off of. They’ll just deliver a prompt – “55-inch TV stand with two cabinets” – and see a menu of alternative designs. They’ll pick one of the designs, refine it, and add any other touches they want.

We can imagine a lot of jobs whose workflows will follow a similar pattern: architecture, graphic design, or interior design. Lawyers will probably write legal briefs this way, and administrative assistants will use this technique to draft memos and emails. Marketers will have an idea for a campaign, generate copy en masse, and add the finishing touches. Consultants will generate whole PowerPoint decks with coherent narratives from a short statement of their vision and then fill in the details. Financial analysts will ask for a type of financial model and get an Excel template with data sources autofilled.

What’s common to all of these visions is something we call the “sandwich” workflow. This is a three-step process. First, a human has a creative impulse, and gives the AI a prompt. The AI then generates a menu of options. The human then chooses an option, edits it, and adds any touches they like.
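To make the three layers of the sandwich concrete, here is a toy sketch. Everything in it is hypothetical: `sandwich`, `fake_generate`, `pick_shortest`, and `add_finishing_touch` are illustrative names, and `fake_generate` merely stands in for a real generative model (it returns four drafts, echoing Midjourney’s four samples). The point is only the shape of the process: a human supplies the prompt, the machine supplies the menu, and a human makes the final choice and edits.

```python
from typing import Callable, List


def sandwich(prompt: str,
             generate: Callable[[str, int], List[str]],
             choose: Callable[[List[str]], int],
             edit: Callable[[str], str],
             n_options: int = 4) -> str:
    """Human prompt -> AI menu of options -> human selection and editing."""
    options = generate(prompt, n_options)  # AI step: produce a menu of drafts
    picked = options[choose(options)]      # human step: pick one option
    return edit(picked)                    # human step: add finishing touches


# Hypothetical stand-ins for a generative model and a human editor.
def fake_generate(prompt: str, n: int) -> List[str]:
    return [f"{prompt} (draft {i + 1})" for i in range(n)]


def pick_shortest(options: List[str]) -> int:
    # Toy "taste": choose the shortest draft (ties go to the first one).
    return min(range(len(options)), key=lambda i: len(options[i]))


def add_finishing_touch(draft: str) -> str:
    return draft + " [edited]"


result = sandwich("55-inch TV stand with two cabinets",
                  fake_generate, pick_shortest, add_finishing_touch)
print(result)  # 55-inch TV stand with two cabinets (draft 1) [edited]
```

In practice the middle layer is often a loop rather than a single call, since the human may keep prompting the AI to alter or riff on an option before doing the final edit by hand.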

The sandwich workflow is very different from how people are used to working. There’s a natural worry that prompting and editing are inherently less creative and fun than generating ideas yourself, and that this will make jobs more rote and mechanical. Perhaps some of this is unavoidable, as when artisanal manufacturing gave way to mass production. The increased wealth that AI delivers to society should allow us to afford more leisure time for our creative hobbies.

But even if people aren’t as artisanal in their jobs, that doesn’t mean humans will have to give up the practice of individual creativity; we’ll just do it for fun instead of for money. CAD software and machine tools haven’t taken the fun out of woodworking or metalworking – they just mean that people who enjoy these creative pursuits usually don’t get to combine their hobbies with their jobs. But that’s just capitalism – what we produce has always been dictated by the market, while our true expressive artisanal creation has always been done on our own time, and only a lucky few people have ever been able to combine the two.

Ultimately, though, we predict that lots of people will just change the way they think about individual creativity. Just as some modern sculptors use machine tools, and some modern artists use 3D rendering software, we think that some of the creators of the future will learn to see generative AI as just another tool – something that enhances creativity by freeing up human beings to think about different aspects of the creation.

So that’s our prediction for the near-term future of generative AI – not something that replaces humans, but something that gives them superpowers. A proverbial bicycle for the mind. Adjusting to those new superpowers will be a long, difficult trial-and-error process for both workers and companies, but as with the advent of machine tools and robots and word processors, we suspect that the final outcome will be better for most human workers than what currently exists.

https://noahpinion.substack.com/p/generative-ai-autocomplete-for-everything