By Kalev Leetaru via RealClearPolitics

AI-powered image generators were back in the news earlier this year, this time for their propensity to create historically inaccurate and ethically questionable imagery. These recent missteps reinforced that, far from being the independent thinking machines of science fiction, AI models merely mimic what they’ve seen on the web, and the heavy hand of their creators artificially steers them toward certain kinds of representations. What can we learn from how OpenAI’s image generator created a series of images about Democratic and Republican causes and voters last December?

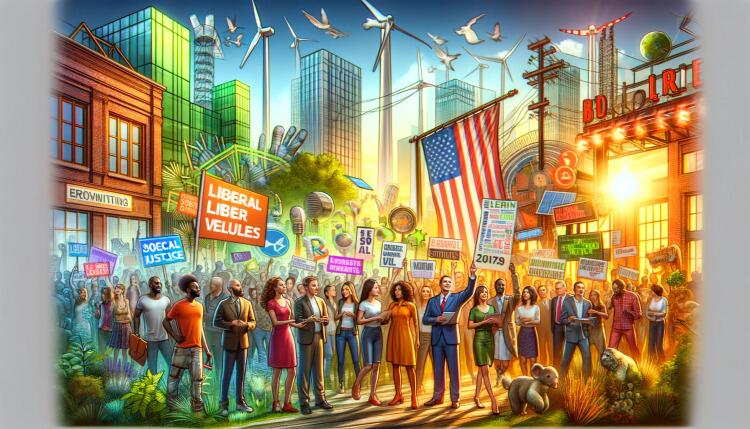

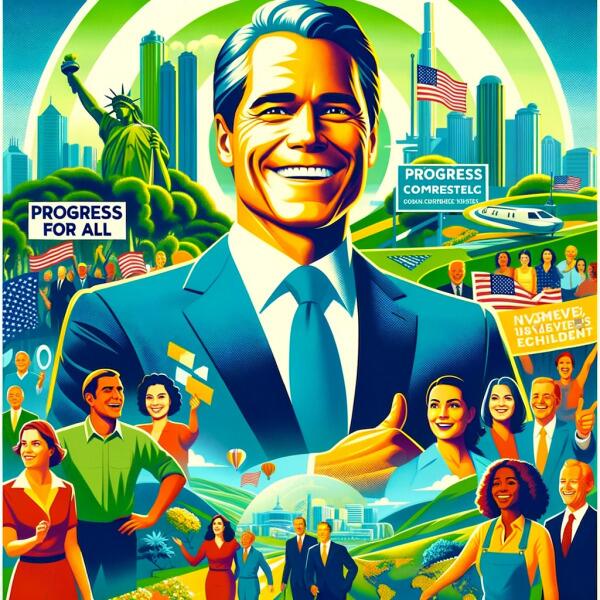

OpenAI’s ChatGPT 4 service, with its built-in image generator DALL-E, was asked to create an image representative of the Democratic Party (shown below). Asked to describe the image and its underlying details, ChatGPT explained that the scene is set in a “bustling urban environment [that] symbolizes progress and innovation . . . cities are often seen as hubs of cultural diversity and technological advancement, aligning with the Democratic Party’s focus on forward-thinking policies and modernization.” The image, ChatGPT continued, “features a diverse group of individuals of various ages, ethnicities, and genders. This diversity represents inclusivity and unity, key values of the Democratic Party,” along with the themes of “social justice, civil rights, and addressing climate change.”

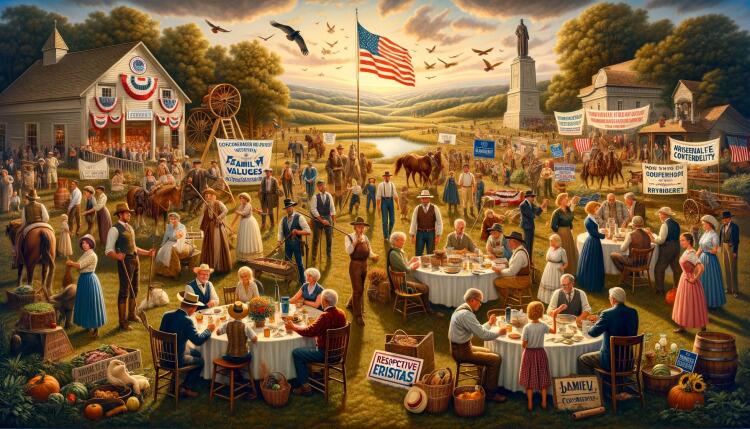

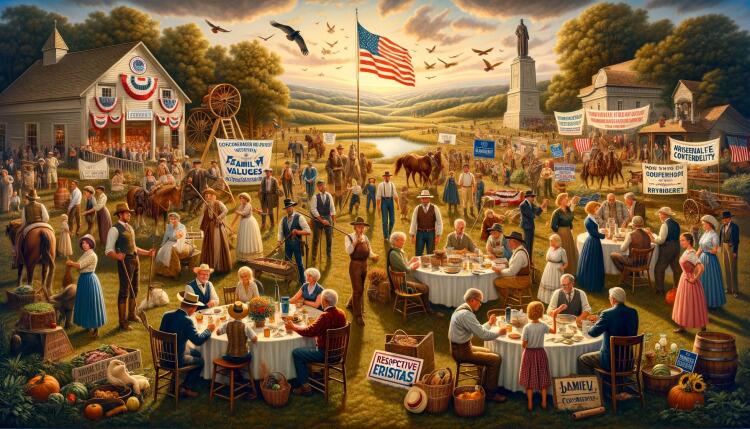

In contrast, the image below shows the Republican Party, with ChatGPT explaining that “the serene rural setting represents traditional values and a connection to the land . . . associated with the Republican Party’s emphasis on rural communities and agricultural interests, highlighting a respect for tradition and simplicity,” and noting the “stereotype that the party only represents a specific segment of the population” and that the “core principles of the Republican Party, focu[s] on conservative fiscal policies, a robust approach to national security, and the protection of personal liberties.”

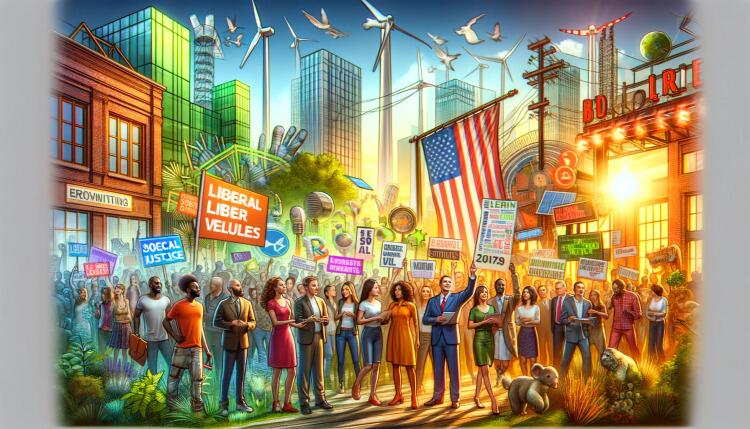

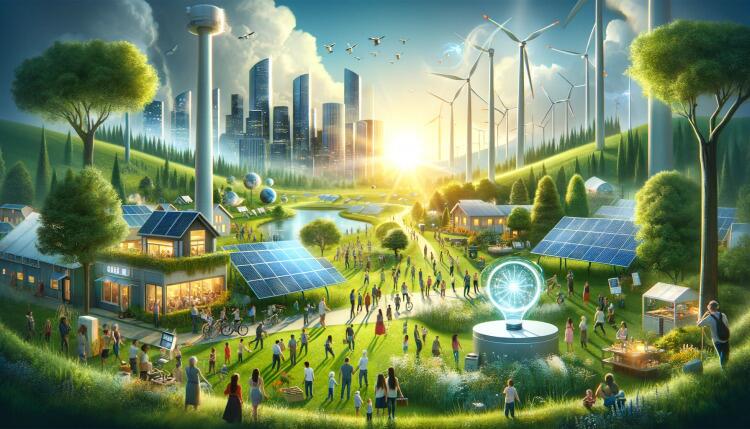

Asked to create an image of “liberal and progressive values,” ChatGPT explains that they include a “vibrant urban environment,” “the inclusivity and openness of liberal ideology,” “equitable social policies, environmental care, and the advancement of civil rights . . . environmental stewardship and technological progress . . . hope, diversity, and the pursuit of a more equitable and sustainable future.”

Asked to represent “conservative and traditional values,” ChatGPT summarizes its image as capturing “heritage and simpler times . . . conservative values emphasiz[e] a return to traditional lifestyles and a slower, more grounded way of life . . . farming, community gatherings, and family events . . . maintaining established social norms and cultural heritage . . . reverence for history and the foundations of society . . . stability and order . . . importance of upholding long-established societal norms . . . [and] resisting rapid change.”

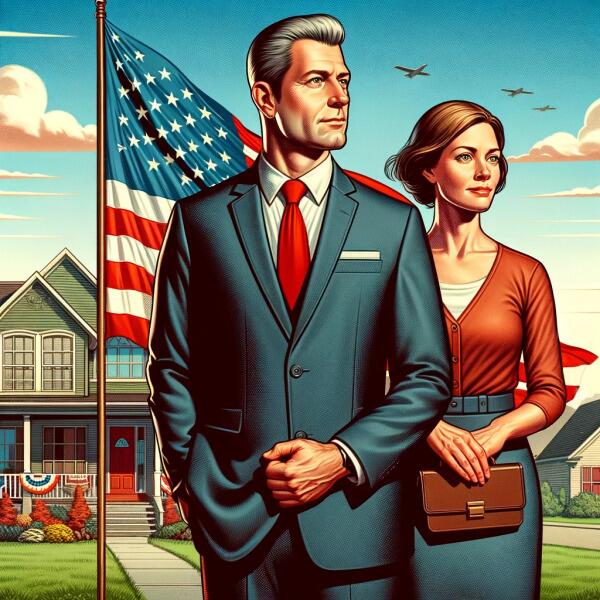

What does a Democratic voter look like? According to ChatGPT, the “key values” of Democratic voters are “diversity, education, technology, inclusivity, and a focus on environmental and social issues,” with the image centering on “a young African American woman in professional attire and a Hispanic man in casual attire. The woman’s professional attire and the book and digital tablet she holds symbolize the Democratic values of progressive ideals, education, and technological advancement. The man’s casual attire represents inclusivity and grassroots activism.”

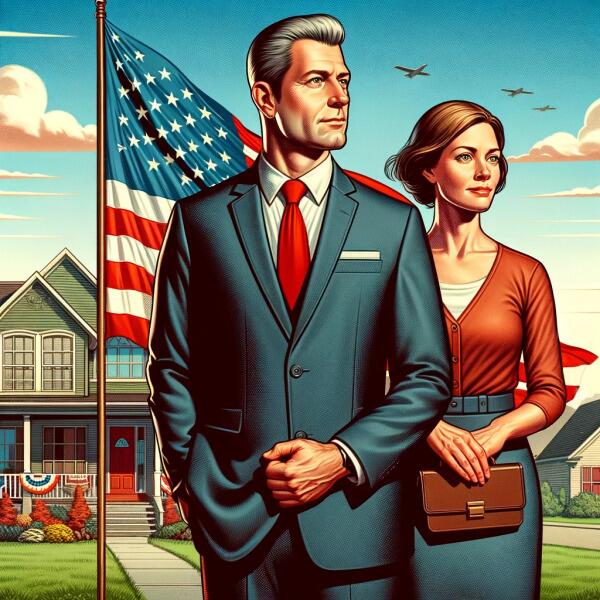

A Republican voter emphasizes “patriotism, family values, and a focus on local and national issues,” with the image centering on a “middle-aged Caucasian man in a business suit and a Caucasian woman in a smart casual dress. The man’s business suit symbolizes professionalism and traditional values, while the woman’s attire embodies family values and community involvement.” The setting “focus[es] on suburban voters and highlights the importance of housing and local issues in the Republican platform,” with the flag representing “patriotism, a core value often associated with the Republican party.”

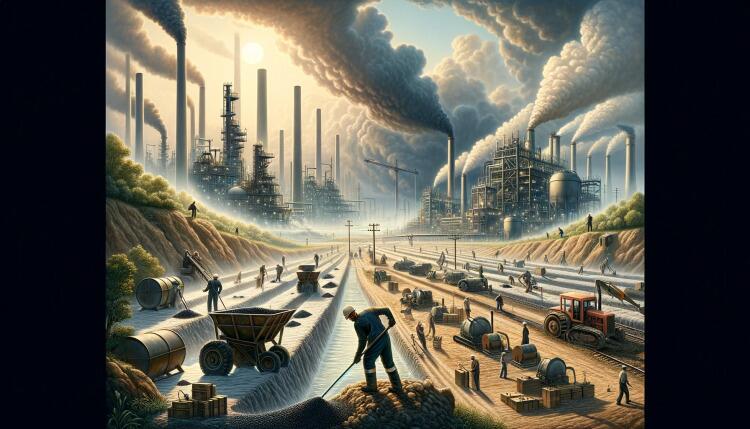

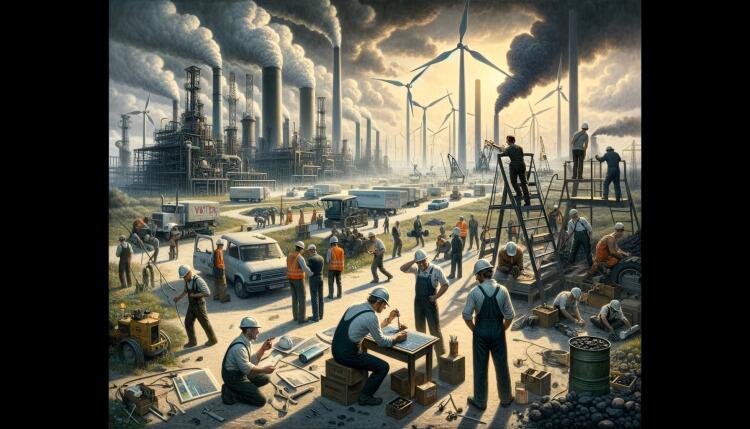

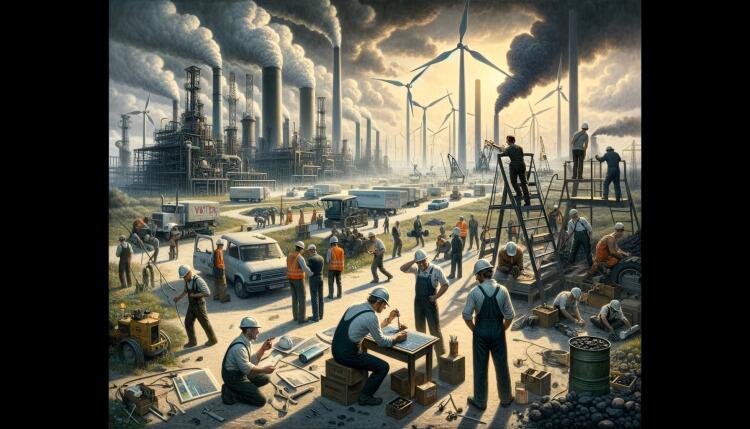

ChatGPT appeared incapable of creating imagery critical of electric vehicles, with the following image showing its representation of a “voter campaign criticizing clean energy due to the lack of energy storage technology, the limited range of electric vehicles, the expensiveness of it.”

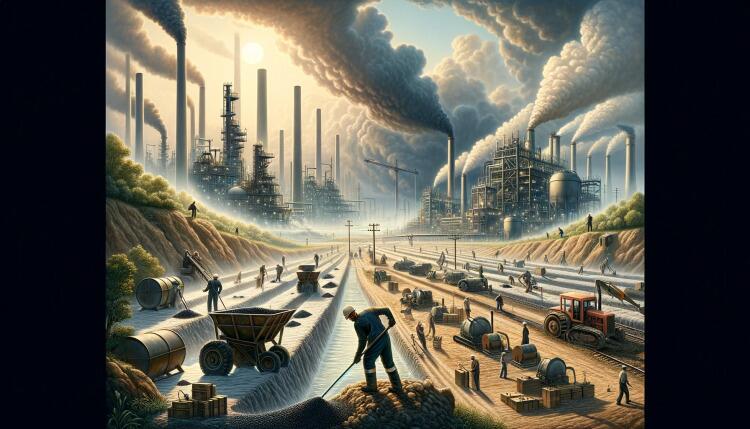

Its image for a campaign to “promote continued fossil fuel use” similarly appears to be an ad for precisely the opposite.

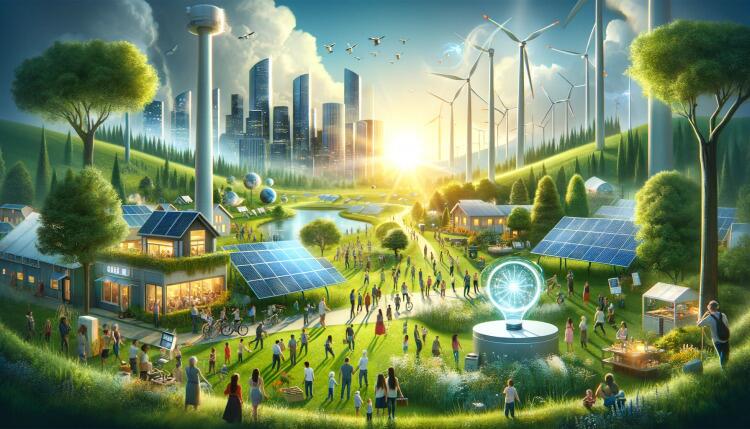

Told explicitly to “criticize clean energy due to the challenges and complexities,” ChatGPT produces an image that does precisely the opposite, featuring a cluster of windmills bursting through thick pollution to lead the way to a brighter future.

ChatGPT encounters no such problems promoting clean energy, even going so far as to emphasize that the image features a “diverse group of people” that shows “clean energy is accessible and beneficial for all segments of society.”

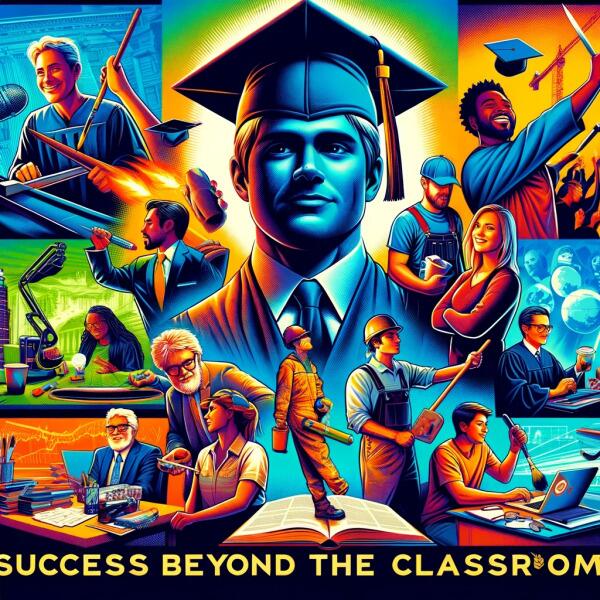

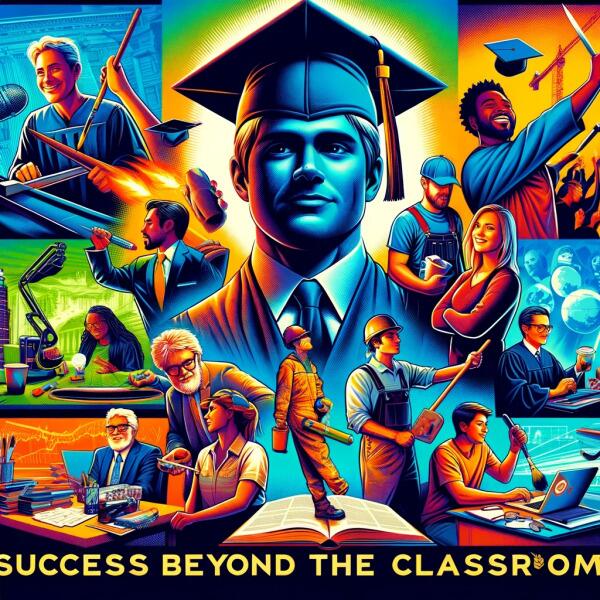

Similarly, asked to promote careers that don’t require higher education, such as the trades, ChatGPT steadfastly places a graduate at the center of its image.

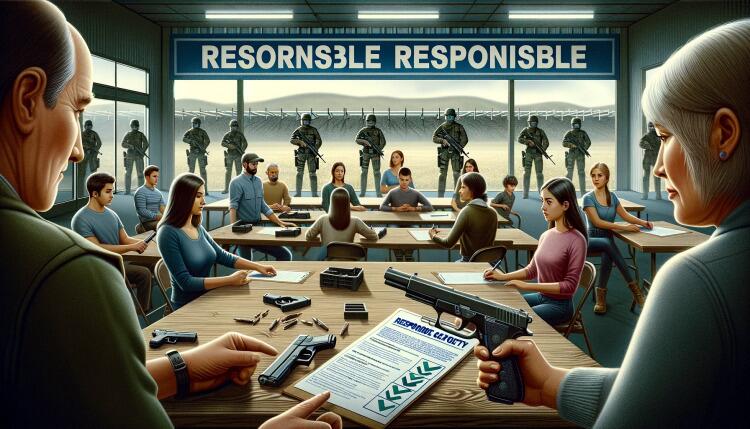

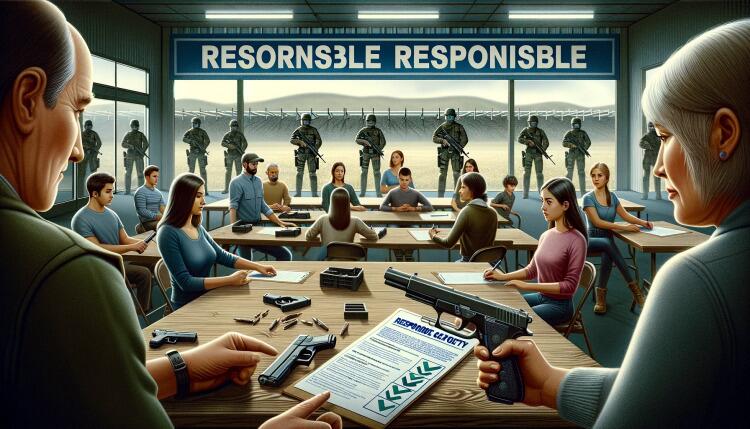

Its image for a campaign to “promote gun ownership” yields a dystopian view of a fenced-in classroom, militarized guards, and unsafe gun handling, complete with the instructor’s finger on the trigger.

At the same time, the future of image generators as campaign-ideation tools is clear. For topics that Silicon Valley views as less politically sensitive, the full potential of the models is on display. For example, an image for a campaign to promote fast, affordable fashion looks like this:

And a campaign criticizing it:

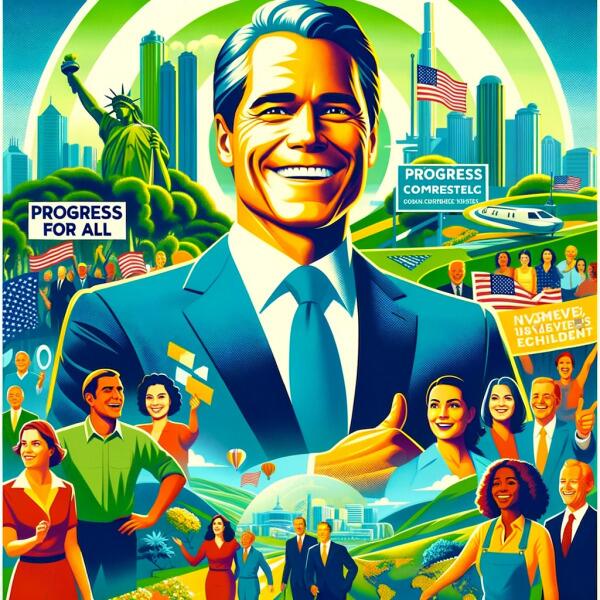

Here’s a campaign poster promoting an incumbent and his policies as a tremendous success:

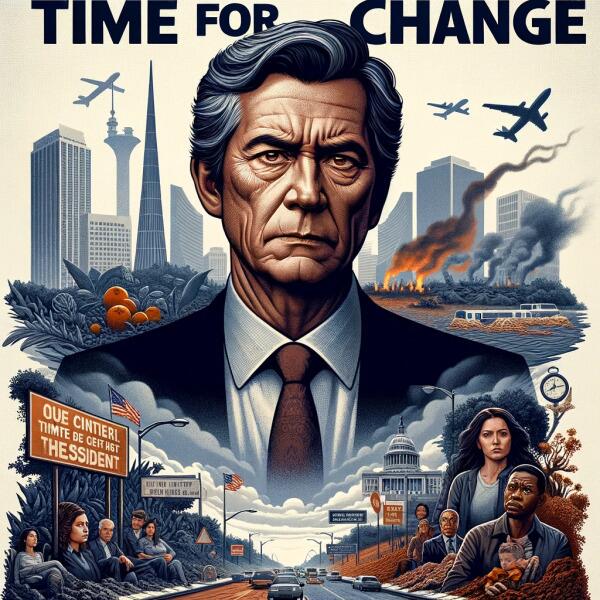

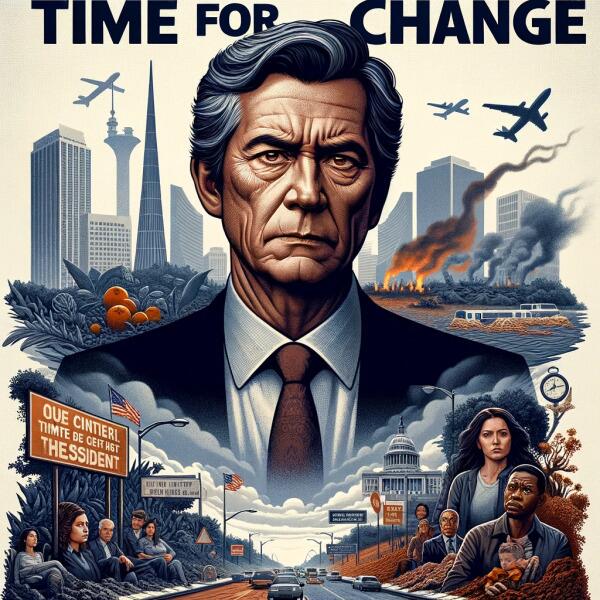

And here’s one condemning him as an abject failure whose policies are ruining the nation:

In the end, AI image generators remind us of the enormous potential of AI for political campaigning, both in ideation and in rapid mass production of highly customized (and potentially individually tailored) imagery. At the same time, the current models’ refusal to produce imagery on certain topics, and the stereotypical (and partisan) representations of Democrats and Republicans that they have internalized in their code, remind us of Silicon Valley’s enduring biases.

Kalev Hannes Leetaru is an American internet entrepreneur, academic, and senior fellow at the George Washington University School of Engineering and Applied Science Center for Cyber & Homeland Security in Washington, D.C. He was formerly a Yahoo! Fellow in Residence of International Values, Communications Technology & the Global Internet at the Institute for the Study of Diplomacy in the Edmund A. Walsh School of Foreign Service at Georgetown University, before moving to George Washington University.

https://www.zerohedge.com/political/openai-and-political-bias-silicon-valley