We often forget this but clinical trials are experiments on humans. To minimize risks to participants, trials have independent oversight boards that make recommendations on stopping trials early.

A typical example is when one group in the trial shows obviously better results. The group of experts judge whether it would be ethical to continue the trial—because if the effect is so much better in one arm of the trial, the other arm is getting inferior care.

The tension, of course, is that trials stopped early have fewer events. Fewer events increase the risk of seeing noise instead of signal. A fair coin is far more likely to show a lopsided share of tails when tossed only 10 times than when tossed 100 times.
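To put rough numbers on the coin example, here is a minimal Python sketch. The 70% tails cutoff is my own choice for illustration, and the function name is made up.

```python
from math import comb

def prob_at_least_tails(n_tosses: int, min_tails: int) -> float:
    """Exact probability that a fair coin shows at least min_tails tails."""
    favorable = sum(comb(n_tosses, k) for k in range(min_tails, n_tosses + 1))
    return favorable / 2 ** n_tosses

# Chance of seeing 70% or more tails from a perfectly fair coin
p_10_tosses = prob_at_least_tails(10, 7)
p_100_tosses = prob_at_least_tails(100, 70)

print(f"10 tosses:  {p_10_tosses:.4f}")
print(f"100 tosses: {p_100_tosses:.6f}")
```

With 10 tosses a lopsided result is fairly common. With 100 tosses it is rare. The same logic applies to a trial with few events versus many.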

I wanted to highlight today a classic paper on the risks of stopping trials early for benefit. I then add an example of a common medicine.

The stimulus for this post came from a review article by Sanjay Kaul and Javed Butler wherein they discussed insights from the early stopping of 4 pivotal trials in chronic kidney disease. (I did not remember that the three SGLT2i trials and one GLP1a trial had been stopped early for benefit.)

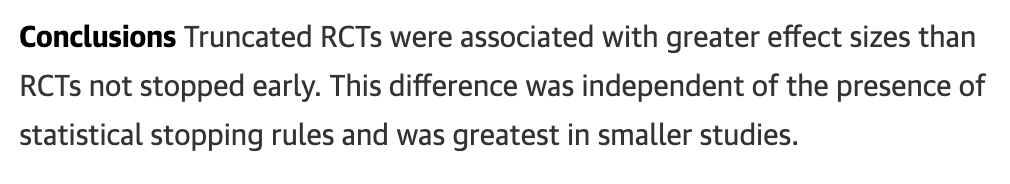

The Classic Paper by Bassler et al in JAMA

This group found 91 truncated trials (for benefit) that asked 63 different questions. They matched these trials against 424 similar but nontruncated trials. They found the nontruncated trials from systematic reviews.

They then compared the effect sizes of the two types of trials and found that, overall, effect sizes were 29% more favorable in truncated trials than in nontruncated trials.

Basically, they divided the relative risk in the truncated trials by the relative risk in the matching nontruncated trials, which gives a ratio of relative risks. A ratio below 1 means the truncated trials looked better. This difference easily met statistical significance.

They also found that the largest difference between truncated and nontruncated trials occurred when the truncated trials had fewer than 500 outcome events. (This makes sense because the fewer the events, the greater the chance of finding noise.)

The final finding was that in more than half the comparisons (62%) the effect size in the nontruncated trials did not reach statistical significance. That is a big deal because every one of the 91 included trials was stopped early for benefit.

The authors concluded:

The Story of ASA for Primary Prevention of Cardiac Events

One of the trials included in Bassler’s analysis was the Physicians Health Study, a large randomized trial comparing aspirin to placebo for the prevention of cardiac events in patients without heart disease (aka primary prevention).

The trial included 22K participants. An early look at the data revealed a reduction in total myocardial infarction. The hazard ratio was 0.53, with a 95% confidence interval of 0.42-0.67. The p-value was calculated at less than 0.00001. This prompted the study to be stopped early.
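As a rough sanity check, you can back out an approximate p-value from the reported interval. This sketch assumes the interval is symmetric on the log scale and uses a normal approximation. It is my own back-of-the-envelope calculation, not how the trialists computed their p-value.

```python
from math import erfc, log, sqrt

# Reported values for total MI in the Physicians Health Study
hr, ci_low, ci_high = 0.53, 0.42, 0.67

# Assumption: a 95% interval spans about 2 * 1.96 standard errors on the log scale
se_log_hr = (log(ci_high) - log(ci_low)) / (2 * 1.96)

# z-statistic for the null hypothesis HR = 1 (log HR = 0)
z = log(hr) / se_log_hr

# Two-sided p-value from the standard normal distribution
p_value = erfc(abs(z) / sqrt(2))

print(f"z = {z:.2f}, approximate two-sided p = {p_value:.1e}")
```

The approximate p-value lands well below 0.00001, which is consistent with what was reported.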

This was one of the trials that got aspirin use established as a preventive medicine. In the final report, though, the reduction in MI did not translate into a reduction in total cardiovascular death.

In 2018, three modern trials comparing asa vs placebo for primary prevention were published. All were nonsignificant. Let me show you the one that most closely resembles the Physicians Health Study. It was called ARRIVE.

In this study, more than 12,500 patients without heart disease were randomized to asa 100 mg per day or placebo. The six-year trial was not stopped.

The rate of the composite primary outcome of CV death, MI, unstable angina, stroke or transient ischemic attack was 4.29% with asa vs 4.48% with placebo. The tiny difference did not come close to reaching statistical significance.

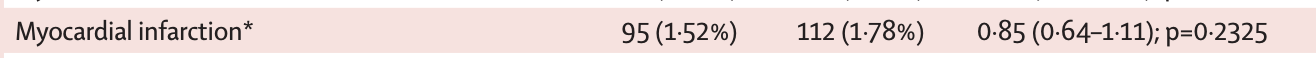

Since the Physicians Health Study was stopped due to lower MI rates, I will show you the MI results in ARRIVE:

The hazard ratio here was 0.85 or 15% better with aspirin—but it did not reach statistical significance.

So, if we did a Bassler-et-al type analysis for MI, we would find an HR of 0.53 in the truncated Physicians Health Study vs an HR of 0.85 in the nontruncated ARRIVE study.

The effect size for aspirin in the truncated trial was 38% more favorable than in the nontruncated trial. (HR 0.53 / HR 0.85 = a ratio of 0.62.)
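Here is that arithmetic spelled out in Python. Keep in mind that comparing a single pair of trials this way is only a loose analogy to Bassler et al, who pooled many matched trials. The variable names are mine.

```python
# Hazard ratios for MI quoted in this post
hr_truncated = 0.53      # Physicians Health Study (stopped early)
hr_nontruncated = 0.85   # ARRIVE (ran to completion)

# Ratio of the two effect sizes; below 1 means the truncated trial looked better
ratio = hr_truncated / hr_nontruncated

# Express the ratio as "percent more favorable"
percent_more_favorable = (1 - ratio) * 100

print(f"ratio = {ratio:.2f}, {percent_more_favorable:.0f}% more favorable")
```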

Caveats with the ASA example

I do not mean to imply that all of the difference between the early positive asa trials and the newer neutral asa trials was due to early termination.

Other factors could explain the difference. For one, 20 years separated the trials. The ARRIVE authors attributed the lack of asa benefit to the very low event rates. Indeed, the event rates for MI were lower in the modern trial.

There have been many other changes (removal of trans fats from food, less smoking, more effective blood pressure drugs, etc) that could have reduced the effect of asa in the modern era.

But early termination for benefit in the Physicians Health Study could have contributed to its large effect size for MI.

Teaching Points and Things to Think About

The main teaching point is that when you read that a trial is stopped early for efficacy, think about Bassler et al.

It doesn’t mean the trialists have done anything wrong. Ethics hold that you don’t want to expose patients to inferior treatment. But a trial stopped early has a decent chance of finding an effect size larger than a similar trial that was not stopped early.
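If you want to see the mechanism for yourself, a toy simulation helps. The sketch below is not a model of any real trial. The event risks, the interim look schedule, and the stopping boundary (z above 2.5) are all hypothetical values I picked for illustration, and real monitoring boards use more formal boundaries.

```python
import random
from math import sqrt

TRUE_CONTROL_RISK = 0.10          # hypothetical event risk on placebo
TRUE_TREATED_RISK = 0.08          # hypothetical; the true relative risk is 0.80
LOOKS = (500, 1000, 1500, 2000)   # patients per arm at each look; last is final
Z_STOP = 2.5                      # hypothetical stopping boundary for benefit

def simulate_trial(rng):
    """Run one trial; return (observed relative risk, stopped_early)."""
    treated_events = control_events = enrolled = 0
    for look in LOOKS:
        new_patients = look - enrolled
        treated_events += sum(rng.random() < TRUE_TREATED_RISK for _ in range(new_patients))
        control_events += sum(rng.random() < TRUE_CONTROL_RISK for _ in range(new_patients))
        enrolled = look
        risk_treated = treated_events / enrolled
        risk_control = control_events / enrolled
        # z-test for a difference in proportions using the pooled risk
        pooled = (treated_events + control_events) / (2 * enrolled)
        se = sqrt(2 * pooled * (1 - pooled) / enrolled)
        z = (risk_control - risk_treated) / se if se > 0 else 0.0
        if z > Z_STOP and look != LOOKS[-1]:
            return risk_treated / risk_control, True
    # Assumes at least one control event by the final look (near certain here)
    return risk_treated / risk_control, False

rng = random.Random(1)  # fixed seed so the run is repeatable
stopped, completed = [], []
for _ in range(500):
    rr, early = simulate_trial(rng)
    (stopped if early else completed).append(rr)

mean_rr_stopped = sum(stopped) / len(stopped)
mean_rr_completed = sum(completed) / len(completed)
print(f"true RR 0.80 | stopped early: {mean_rr_stopped:.2f} "
      f"({len(stopped)} trials) | ran to completion: {mean_rr_completed:.2f}")
```

Every simulated trial tests the same drug with the same true effect. The only thing that differs is luck, and the trials that stop early are the ones where luck made the drug look best.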

The argument at hand is how much information we wish to obtain from trials. When we stop early, there are fewer events. In addition to accentuating effect size, fewer events also mean less chance of finding signals in subgroups of patients.

I don’t pretend to have answers on the perfect way to conduct trials. My point is to encourage users of medical evidence to be mindful of the implications of early termination of trials.

Kaul and Butler’s paper that I linked to earlier is also an excellent resource.

John Mandrola is a heart rhythm doc, writer/podcaster for @Medscape, learner, cyclist, married to an #HPM doctor. #MedicalConservative.
